Search for Next Generation

Reimagining Google Search for the Next Generation

problem

Our current app is a "Utility," not a "Destination." The data tells a consistent story: for Gen Z and Gen Alpha, the Google app is starting to feel like a legacy tool. It’s functional, but it’s purely transactional. They come in, get a quick answer, and leave. Our research shows they see the experience as redundant and "utilitarian": it lacks the personality, social connection, and delight they’ve come to expect from the other apps they spend time in.

Architecturally, we’re also making them work too hard. Our multimodal features like Lens and Voice feel like separate "modes" rather than one fluid experience. For a generation that grew up blending images, text, and video naturally, this fragmented UI creates unnecessary friction. We’re essentially asking them to adapt to our technical silos, rather than building an interface that adapts to their intent. If we don’t move past this "passive consumption" model, we’re going to lose the chance to be their primary window to the world.

solution

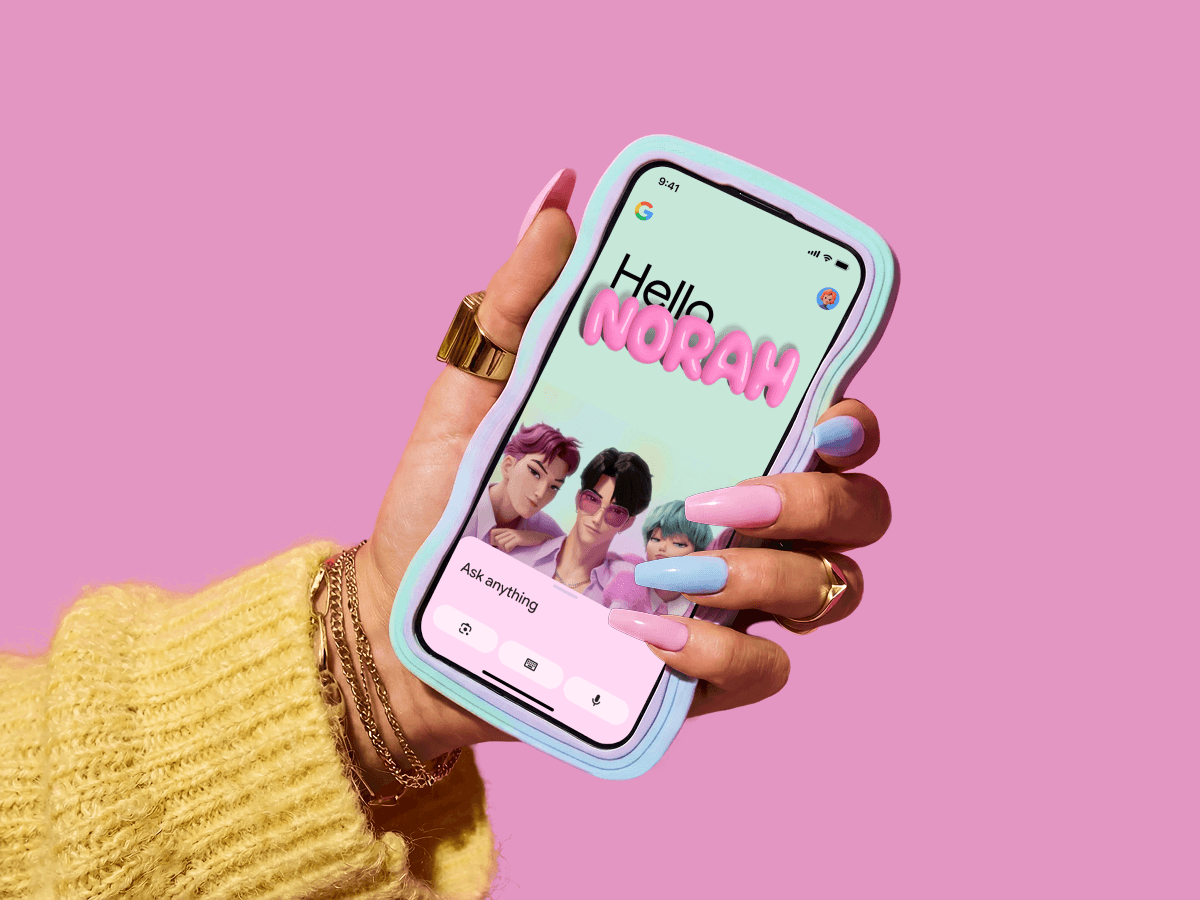

Making Search Feel Like Home

With this project, we’re shifting the focus from "retrieval" to "expression." The core of this vision is a hyper-customizable architecture that turns the app into a personal playground. We’re moving away from a fixed home screen to one that’s modular and "skin-able," where users can pin AI-generated widgets, use virtual avatars to represent themselves, and truly own their digital space. We want the app to feel as unique as the person using it, whether they’re a visual learner or someone who prefers high-density text.

On the interaction side, we’re cleaning up the mess with "The Single Box." It’s one intelligent entry point that handles any query, whether voice, image, or text, and lands you in a unified, immersive view. But we’re going beyond just finding info; we’re moving into "agentic" territory. Bloom helps you pick up where you left off on a project, collaborate with friends on a shared vision board, and even handles tasks like auto-buying tickets. It’s about evolving the app from a place where you just "search" into a proactive assistant that helps you get things done, together.

From Architectural Chaos to a Unified Vision

This initiative began with a critical realization: our current architecture was fundamentally misaligned with the mental models of the next generation. We started by auditing our design system and identified a major friction point: our multimodal tools like Lens, Voice, and AI were functioning as technical silos, creating unnecessary cognitive load. We knew we had to move beyond incremental tweaks and instead pivot toward a structure where personalization acts as the primary anchor for the entire experience.

The turning point came during our cross-functional workshops. By testing early concepts with Gen Alpha panels, we learned that they didn’t just want a faster search tool; they wanted a digital "playground" that reflected their identity and supported social collaboration. This insight drove us to develop a unified input framework and a hyper-customizable home, shifting the app from a passive utility to an expressive, high-agency environment where users can truly own their space.

This vision was never meant to be just a conceptual deck. It served as the foundation for a series of strategic project roadmaps that are currently in active development. We are now in the process of stitching these workstreams together—integrating a new visual language and agentic features into the product. This framework is no longer just a North Star; it is the blueprint we are actively building against to redefine the future of the app.

year

2025

role

Sole IC Designer (Partnered with 3 Sr Design Directors, 1 Sr Product Director, 2 Data Science & Research)

area

Search Strategy
